Tzach's Portfolio

AI Inference Acceleration on an Embedded GPU

January 1, 2021

GPU Optimization

It’s remarkable to see how accelerating AI algorithms can transform an entire software product! In this project, I accelerated ear localization on an SoC:

Arm HiKey970 Board

Main Challenges

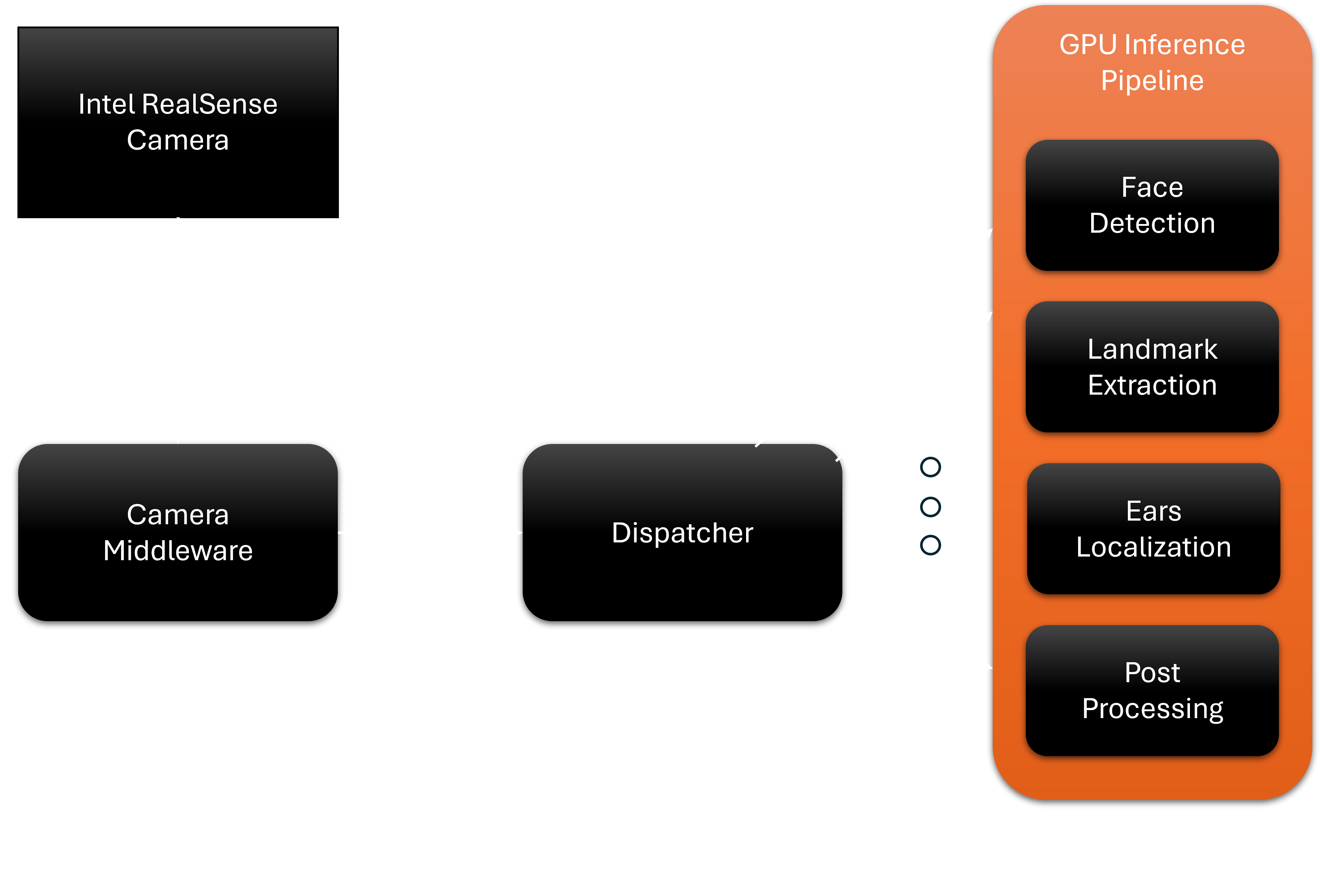

- Offload the heavy CPU workload to the embedded GPU.

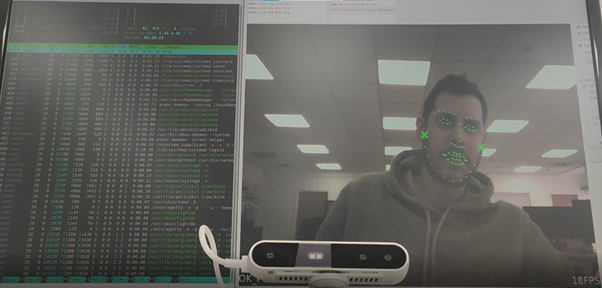

- Reduce per-frame latency and raise throughput above the previous average of 10 FPS.

- Integrate the inference pipeline into an existing embedded product.

The Solution

- Training a landmark extraction model.

- Model conversion and quantization.

- Development of a C++ infrastructure on the HiKey970 board that loads such models and runs inference on the GPU.
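The quantization step above can be illustrated with the common per-tensor asymmetric uint8 scheme, where a float value is stored as `real = scale * (q - zero_point)`. This is a minimal sketch under that assumption; the post does not state which scheme or converter settings were actually used, and the sample `weights` are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class QuantParams:
    scale: float
    zero_point: int


def choose_params(min_val: float, max_val: float) -> QuantParams:
    """Per-tensor asymmetric uint8 parameters for the float range [min_val, max_val]."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    min_val, max_val = min(min_val, 0.0), max(max_val, 0.0)
    scale = (max_val - min_val) / 255.0
    if scale == 0.0:  # degenerate all-zero tensor
        scale = 1.0
    zero_point = int(round(-min_val / scale))
    return QuantParams(scale, max(0, min(255, zero_point)))


def quantize(x: float, p: QuantParams) -> int:
    # Python's round() rounds halves to even; real converters may round differently.
    q = int(round(x / p.scale)) + p.zero_point
    return max(0, min(255, q))  # saturate to the uint8 range


def dequantize(q: int, p: QuantParams) -> float:
    return p.scale * (q - p.zero_point)


# Hypothetical example tensor, quantized and restored to measure the round-trip error.
weights = [-1.0, -0.25, 0.0, 0.5, 1.55]
params = choose_params(min(weights), max(weights))
restored = [dequantize(quantize(w, params), params) for w in weights]
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Within the calibrated range, the round-trip error is bounded by half a quantization step (`scale / 2`); values outside it saturate to 0 or 255, which is why the min/max calibration data matters.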

GPU Inference Pipeline
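The overall shape of such a pipeline (preprocess the frame, run the model on a backend, decode the output) can be sketched as below. This is a hypothetical illustration in Python rather than the project's C++ code: the `InferenceBackend` interface, the `EchoBackend` test stand-in, the single-channel frame layout, and the heatmap-peak decoding in `postprocess` are all assumptions, not the ArmNN or MNN APIs or the real model's output format.

```python
from abc import ABC, abstractmethod
from typing import List, Sequence, Tuple


class InferenceBackend(ABC):
    """Hypothetical runtime interface; a real implementation might wrap ArmNN or MNN."""

    @abstractmethod
    def load(self, model_path: str) -> None:
        """Load a converted (e.g. quantized) model from disk."""

    @abstractmethod
    def run(self, tensor: List[float]) -> List[float]:
        """Run one inference and return the flat output tensor."""


class EchoBackend(InferenceBackend):
    """Stand-in used for testing: records the model path and echoes its input."""

    def __init__(self) -> None:
        self.model_path = ""

    def load(self, model_path: str) -> None:
        self.model_path = model_path

    def run(self, tensor: List[float]) -> List[float]:
        return list(tensor)


class LandmarkPipeline:
    """Preprocess -> backend inference -> decode, for one single-channel frame."""

    def __init__(self, backend: InferenceBackend, model_path: str, width: int) -> None:
        self.backend = backend
        self.width = width
        backend.load(model_path)

    def preprocess(self, frame: Sequence[int]) -> List[float]:
        # Scale 8-bit pixel values to [0, 1].
        return [p / 255.0 for p in frame]

    def postprocess(self, output: List[float]) -> Tuple[int, int]:
        # Assumed output format: a width-wide heatmap; return the (x, y) of its peak.
        peak = max(range(len(output)), key=output.__getitem__)
        return peak % self.width, peak // self.width

    def __call__(self, frame: Sequence[int]) -> Tuple[int, int]:
        return self.postprocess(self.backend.run(self.preprocess(frame)))
```

With `EchoBackend`, a 4-pixel-wide, 12-pixel frame whose only bright pixel is at index 6 decodes to `(2, 1)`. Keeping the runtime behind an interface like this lets the same pre- and postprocessing code be reused when the backend is swapped.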

This way we doubled the frame rate (2x FPS) and reduced CPU utilization!
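The latency side of that result is simple arithmetic: a frame rate of f FPS leaves 1000/f ms per frame, so the 10 FPS baseline corresponds to 100 ms per frame, and doubling it to roughly 20 FPS means about 50 ms per frame. The helpers below are a hypothetical sketch for checking such numbers; `measure_fps` times any per-frame callable and is not the project's benchmarking code.

```python
import time
from typing import Callable


def frame_budget_ms(fps: float) -> float:
    """Per-frame time budget, in milliseconds, for a target frame rate."""
    return 1000.0 / fps


def measure_fps(process_frame: Callable[[], None], num_frames: int = 100) -> float:
    """Average frames per second of process_frame over num_frames calls."""
    start = time.perf_counter()
    for _ in range(num_frames):
        process_frame()
    elapsed = time.perf_counter() - start
    return num_frames / elapsed


baseline_ms = frame_budget_ms(10.0)  # 100.0 ms per frame at 10 FPS
doubled_ms = frame_budget_ms(20.0)   # 50.0 ms per frame at twice that rate
```

When timing a real GPU pipeline this way, it is usually worth excluding a few warm-up frames, since the first inferences can include one-time setup costs.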

Captured Frame

Technologies and Frameworks

C++14, CMake, ArmNN, MNN, OpenCV, Python, PyTorch.